Nowadays, through the Large Language Model (LLM), people have seen that generative AI can achieve a language expression capability that is highly comparable to human language. AI is no longer a distant dream and can now integrate into human work and life. This has revitalized the AI field that has been stagnant for some time. Numerous practitioners are seeking the opportunity to join the next era of technological revolution.

Large models can answer general questions. However, if you want them to serve vertical professional fields, there will be insufficient depth of knowledge and timeliness problems. How can enterprises seize the opportunity and build vertical field services? At present, there are two modes. The first mode is fine-tuning, which is based on the vertical field model of the large model. This has a high comprehensive input cost, and the update frequency is low, which is not suitable for all enterprises. The second mode is to build the enterprise's knowledge assets in the vector database and build the depth services in the vertical field through large model + vector database. The essence is to use the database for prompt engineering.

Enterprise knowledge base documents and real-time information are extracted as vector features and stored in a vector database. Combined with LLM, Chatbot can provide more professional and timely answers, allowing enterprises to create their own specific Chatbots.

This article will focus on the principles and processes of building an enterprise-specific Chatbot based on LLM and vector database, as well as the core capabilities of AnalyticDB for PostgreSQL in creating this scenario.

In the real world, the vast majority of data exists in the form of unstructured data, such as pictures, audio, video, text , and more. Unstructured data have exploded with the emergence of applications such as smart cities, short videos, personalized product recommendations, and visual product searches. To process this unstructured data, we usually use artificial intelligence techniques to extract the characters of unstructured data and convert them into characteristic vectors. These vectors are then analyzed and retrieved to process the unstructured data. This type of database, which can store, analyze, and retrieve characteristic vectors, is called a vector database.

To enable fast retrieval of characteristic vectors, vector databases use technical means to construct vector indexes. The vector indexes we usually refer to belong to Approximate Nearest Neighbors Search (ANNS). The core idea is not limited to returning only the most accurate result items, but to search for data items that may be close neighbors. This means sacrificing some accuracy within an acceptable range to improve retrieval efficiency. This is the key difference between vector databases and traditional databases.

To apply the ANNS vector index more easily to the actual production environment, there are two main methods in the industry. One method is to serve the ANNS vector index separately to provide vector index creation and retrieval capabilities, thus forming a proprietary vector database. The other method is to integrate the ANNS vector index into a traditionally structured database to form a DBMS with vector retrieval functions. In actual business scenarios, proprietary vector databases often need to be used with other traditional databases, which can lead to common problems such as data redundancy, excessive data migration, and data consistency issues. Compared to real DBMS, proprietary vector databases require additional professional maintenance and additional costs and provide limited query language capabilities, programmability, extensibility, and tool integration. In contrast, DBMS with vector retrieval functions is a complete modern database platform that meets database function requirements. The integrated vector retrieval capabilities can achieve the function of a proprietary vector database and inherit the excellent abilities of DBMS, such as ease of use (directly using SQL to process vectors), transactions, high availability, high extensibility, and more.

The AnalyticDB for PostgreSQL introduced in this article is a DBMS with vector retrieval functions and all-in-one database capabilities. Before introducing the specific capabilities of AnalyticDB for PostgreSQL, let's first take a look at the creation process and related principles of Chatbots.

The LLM + AnalyticDB solution can be used to process documents, PDFs, emails, web information, and other content that has not been covered by the LLM training dataset. For example:

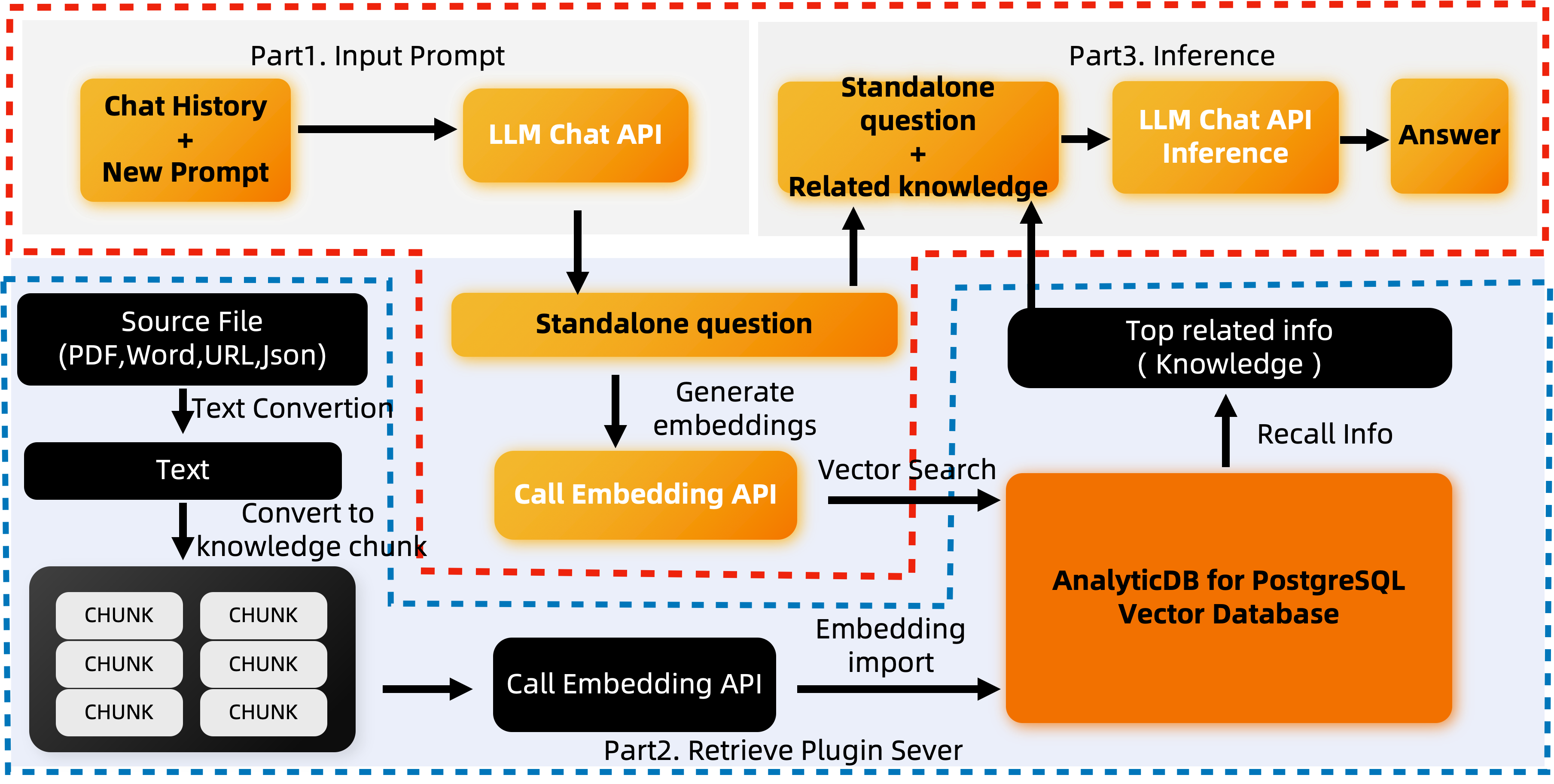

The Local QA System mainly combines the reasoning ability of LLM with the storage and retrieval ability of a vector database. This approach retrieves the most relevant semantic fragments through vectors and then allows the large language model to combine the relevant contextual fragments to reach a correct conclusion. The system involves two main processes:

The underlying layer of this system depends mainly on two modules:

The black portion in the figure above illustrates the backend data processing, which involves embedding the raw data and storing it in the AnalyticDB for PostgreSQL vector database along with the raw data. In particular, note the blue dotted box in the figure, which represents the black processing module and AnalyticDB for PostgreSQL vector database.

● Step 1: Extract all the text content from the original document. Then, cut the text into multiple chunks based on semantics, where each chunk can sufficiently express a complete meaning. In this process, additional actions such as metadata extraction and sensitive information detection can also be performed.

● Step 2: Feed these chunks into the embedding model to obtain the embedding of each chunk.

● Step 3: Save the embedding and the original chunk in the vector database.

This process involves three main steps:

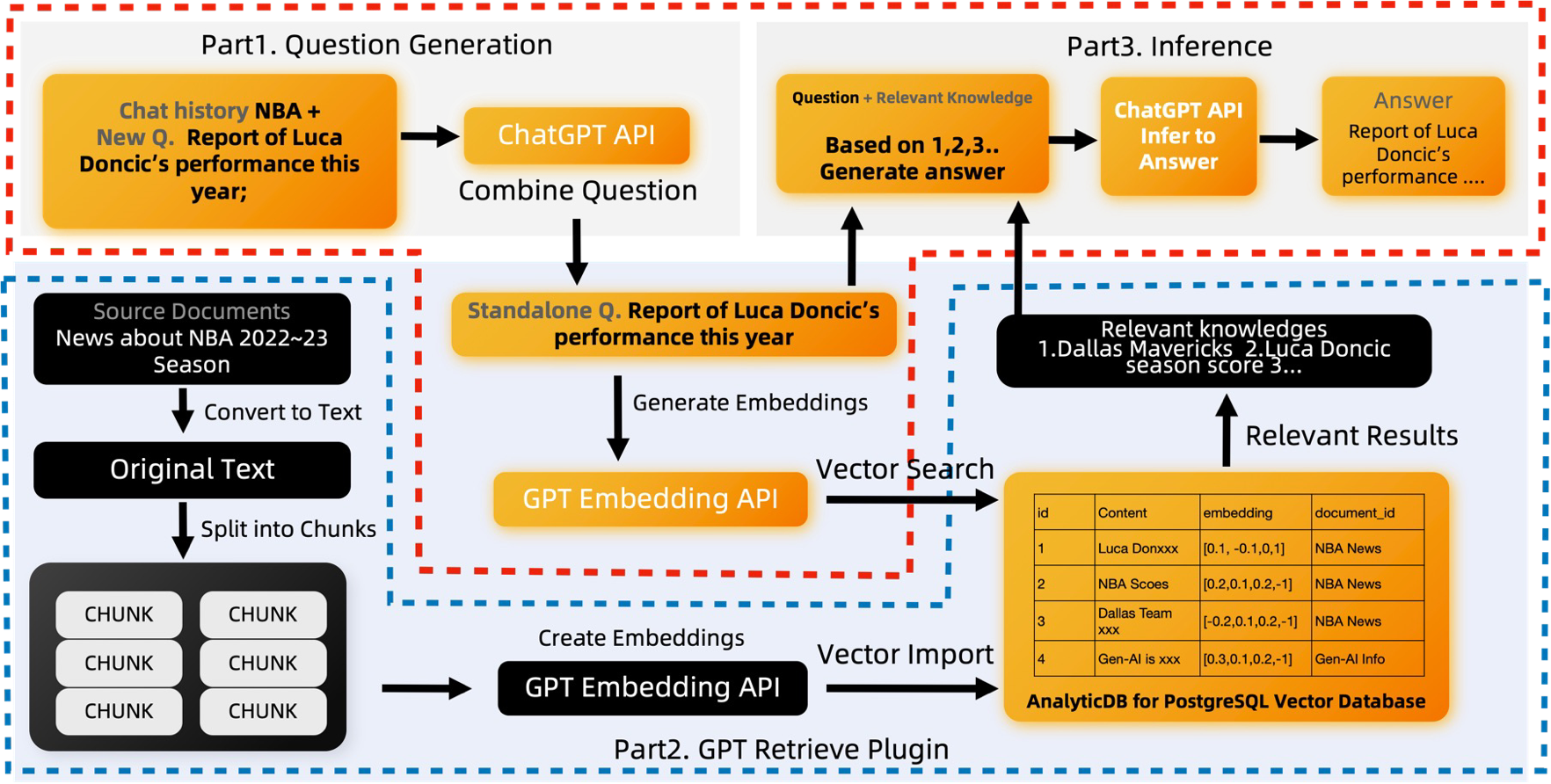

Here, we should focus on the orange portion. The principle alone may be obscure, so let's use an example to illustrate it.

This part is optional and exists because some problems are context-dependent. LLM may not understand the user's intention with new questions. For example, if the user's new question is "What can it do," LLM may not know who or what "it" refers to. To deduce the independent question "What can Tongyi do," LLM needs to combine the previous chat history, such as "What is Tongyi?" LLM cannot correctly answer vague questions like "What's the use of it," but it can correctly answer independent questions like "What's the use of Tongyi?" If your question is independent, you do not need this part.

After obtaining the independent question, we can obtain its embedding. Then, we can search for the most similar vector in the vector database to find the most relevant content. This behavior is part of the functionality of Part 2. Retrieval Plugin.

The function of obtaining the embedding for the independent question is performed by the text2vec model. After obtaining the embedding, we can use it to search for data that has been stored in the vector database in advance. For example, suppose we have stored the following content in AnalyticDB for PostgreSQL: "Tongyi is...," and "Tongyi can help us xxx." We can obtain the closest content or knowledge, such as the first and third items, using the obtained vector.

| id | Content | embedding | document |

| 1 | Tongyi is | [0.1, -0.1,0,1] | LLM Tongyi |

| 2 | NBA Scores Los Angeles Lakers - Brooklyn Nets | [0.2,0.1,0.2,-1] | NBA Today |

| 3 | Tongyi can help us xxx | [-0.2,0.1,0.2,-1] | LLM Tongyi |

| 4 | Generative AI is xxx | [0.3,0.1,0.2,-1] | Generative AI Introduction |

Once we have the most relevant knowledge, we can allow LLM to reason and solve based on the most relevant knowledge and independent questions to get the final answer. Here is the combination of "Tongyi is...", "Tongyi can help us xxx", and other most effective information to answer the question "What is the use of Tongyi". Finally, the GPT's inference solution:

Answer the user's questions concisely and professionally based on the following known information.

If you can't get an answer from it, say "The question cannot be answered based on known information" or "Not enough relevant information was provided". Do not add make-up part in the answer.

Use Chinese in the answer.

已知内容:

1.阿里巴巴“通义千问”是...

2.通义千问可以帮助我们xxx

问题:

通义千问有什么用Why is AnalyticDB for PostgreSQL suitable as a knowledge database for Chatbot? AnalyticDB for PostgreSQL is a cloud-native data warehouse with large-scale parallel processing capabilities. It supports both row store and column store modes, which enables not only high-performance offline data processing but also high-concurrency online analysis and querying of large amounts of data. Therefore, we can say that AnalyticDB for PostgreSQL is a data warehouse platform that supports distributed transactions and mixed workloads, as well as handling various unstructured and semi-structured data sources. For instance, the vector retrieval plug-in implements high-performance vector retrieval and analysis of unstructured data such as images, languages, videos, and texts, while the full-text retrieval plug-in implements analysis of semi-structured data such as JSON.

Thus, in the generative AI scenario, AnalyticDB for PostgreSQL can serve as a vector database to meet the requirements of vector storage and retrieval, as well as structured data storage and querying. Moreover, it can provide full-text retrieval capabilities, providing an all-in-one solution for business applications in the generative AI scenario. Next, we will describe the capabilities of AnalyticDB for PostgreSQL in detail in terms of vector retrieval, integrated retrieval, and full-text retrieval.

The AnalyticDB for PostgreSQL vector retrieval and integrated retrieval functions were first launched on the public cloud in 2020. The AnalyticDB for PostgreSQL vector database is inherited from the data warehouse platform, so it has almost all the benefits of a DBMS, such as ANSI SQL, ACID transactions, high availability, failure recovery, time point recovery, programmability, scalability, and more. At the same time, it supports vector similarity search using dot product distance, Hamming distance, and Euclidean distance. These functions are widely used in commodity recognition and text-based semantic search. With the growth of generative AI, these features have laid a solid foundation for text-based Chatbots. In addition, the AnalyticDB for PostgreSQL vector search engine also uses Intel SIMD instructions to efficiently achieve vector similarity matching.

To illustrate how AnalyticDB for PostgreSQL vector retrieval and integrated retrieval are used, let's consider a specific example. Suppose there is a text knowledge base that divides a batch of articles into chunks and converts them into embedding vectors. The chunks table contains the following fields:

| Field | Type | Description |

| id | serial | Number |

| chunk | varchar(1024) | Text chunks after the article is divided. |

| intime | timestamp | The storage time of the article. |

| url | varchar(1024) | Link to the article to which the text chunk belongs. |

| feature | real[] | Text chunk embedding vector. |

Then the corresponding DDL for table creation is as follows.

CREATE TABLE chunks(

id serial primary key,

chunk varchar(1024),

intime timestamp,

url varchar(1024),

feature real[]

);You need to create a vector index to speed up vector retrieval.

CREATE INDEX ON chunks USING ann(feature) WITH (dim=1536);You can create an index for common structured columns to speed up integrated queries of vectors and structured data.

CREATE INDEX ON chunks(intime);When inserting data, we can directly use the insert syntax in SQL.

INSERT INTO chunks values (default,'xxx','2023-05-04','aaa.bbb.ccc/xxx.pdf',

ARRAY[0.1,0.2,0.1,0.3,……0.9]);In this example, if we want to search for its source article by text, then we can search directly through vector retrieval. The specific SQL is as follows.

SELECT id,chunk,intime,url FROM chunks

ORDER BY

feature <-> array[10,2.0,…,1536.0]

LIMIT 100;Similarly, if we require to find the source articles of a certain text within the last month. Then, we can directly search through integrated search, the specific SQL is as follows.

SELECT id, chunk, intime, url FROM chunks WHERE

intime > '2023-04-01' AND intime <= '2023-05-01'

ORDER BY

feature <-> array[10,2.0,…, 1536.0]

LIMIT 100;After reviewing the example above, it is evident that using vector retrieval and integrated retrieval in AnalyticDB for PostgreSQL is as straightforward as using traditional databases, without any learning threshold. At the same time, we have made many targeted optimizations for vector retrieval, such as vector data compression, vector index parallel construction, vector multi-partition parallel retrieval, and more, which are not described in detail here.

AnalyticDB for PostgreSQL also provides rich full-text search capabilities, such as complex combination conditions and result ranking. For Chinese datasets, AnalyticDB for PostgreSQL also supports Chinese word segmentation, which can efficiently and custom process Chinese text. Additionally, AnalyticDB for PostgreSQL supports the use of indexes to accelerate the performance of full-text search and analysis. These capabilities can be fully utilized in generative AI business scenarios by performing two-way recall on knowledge base documents in combination with the preceding vector retrieval and full-text retrieval capabilities.

The knowledge database search function includes traditional keyword full-text retrieval and vector character retrieval. Keyword full-text retrieval ensures query accuracy, while vector character retrieval provides generalization and semantic matching, recalls knowledge of semantic matching in addition to literal matching, reduces the non-result rate, provides a richer context for large models, and is conducive to summarizing large language models.

Based on the information presented in this article, we can compare a knowledgeable Chatbot to an adult, while a large language model can be seen as the knowledge and learning reasoning ability that the Chatbot acquired from all books and public information in various fields before graduating from college. Therefore, based on the LLM, Chatbot can answer relevant questions until it graduates. However, questions that involve specific professional fields (relevant materials are proprietary to the enterprise) or newly emerged species concepts (not yet born at the time of graduation) cannot be dealt with solely by relying on the knowledge obtained from school (corresponding to the pre-trained LLM). Instead, it needs continuous access to new knowledge after graduation (such as work-related professional learning database), combined with their learning reasoning ability, to make a professional response.

Similarly, Chatbot needs to combine the learning and reasoning capabilities of large language models with an all-in-one database such as AnalyticDB for PostgreSQL that contains vector retrieval and full-text retrieval capabilities (storing enterprise proprietary knowledge and latest knowledge documents and vector characters), enabling it to provide more professional and timely answers based on the knowledge contained in the database.

Check the GitHub page to learn more: https://github.com/openai/openai-cookbook/tree/main/examples/vector_databases/analyticdb

Try AnalyticDB for PostgreSQL for free:

https://www.alibabacloud.com/product/hybriddb-postgresql#J_5764451150

The "Large Language Model (LLM)" mentioned in this article come from third parties (collectively referred to as "third-party models"). Alibaba Cloud cannot guarantee the compliance and accuracy of third-party models and assumes no responsibility for third-party models, or for your behavior and results of using third-party models. Therefore, proceed with caution before you visit or use third-party models. In addition, we remind you that third-party models come with agreements such as "Open Source License" and "License", and you should carefully read and strictly abide by the provisions of these agreements.

How to Achieve "All-in-One Full-Text Search" through Cloud-Native Data Warehouses

Alibaba Cloud Community - January 4, 2024

Alibaba Cloud Community - September 6, 2024

Alibaba Cloud Community - August 18, 2023

Alibaba Cloud Data Intelligence - June 20, 2024

Alibaba Cloud Community - October 31, 2023

ApsaraDB - December 21, 2023

Tongyi Qianwen (Qwen)

Tongyi Qianwen (Qwen)

Top-performance foundation models from Alibaba Cloud

Learn More Alibaba Cloud for Generative AI

Alibaba Cloud for Generative AI

Accelerate innovation with generative AI to create new business success

Learn More AI Acceleration Solution

AI Acceleration Solution

Accelerate AI-driven business and AI model training and inference with Alibaba Cloud GPU technology

Learn More AnalyticDB for PostgreSQL

AnalyticDB for PostgreSQL

An online MPP warehousing service based on the Greenplum Database open source program

Learn MoreMore Posts by ApsaraDB

Start building with 50+ products and up to 12 months usage for Elastic Compute Service

Get Started for Free Get Started for Free